Zero-Shot Reinforcement Learning from Low Quality Data

NeurIPS 2024

Scott Jeen\(^{\alpha}\), Tom Bewley\(^{\beta}\) & Jonathan M. Cullen\(^{\alpha}\)

\(^{\alpha}\) University of Cambridge \(^{\beta}\) University of Bristol

Motivation

- Training policies to (zero-shot) generalise to unseen tasks in an environment is hard! [1]

- Behaviour Foundation Models (BFMs) based on forward-backward representations (FB) [2] and universal successor features (USF) [3] provide principled mechanisms for zero-shot task generalisation

- However, BFMs assume access to idealised (large & diverse) pre-training datasets that we cannot expect to have for real problems

- Can we pre-train BFMs on realistic (small & narrow) datasets?
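As background on how an FB-based BFM generalises zero-shot, the sketch below follows the standard FB recipe from [2]. A new task is specified only by reward-labelled states. It is embedded as z_r = E_s[r(s) B(s)], and the agent then acts greedily on Q(s, a, z_r) = F(s, a, z_r)^T z_r, with no further training. This is a minimal illustration under stated assumptions. The random weights stand in for trained F and B networks, actions are discrete, and rescaling z to norm sqrt(d) is assumed here as a common implementation choice. All names (`forward`, `backward`, `infer_task`, `act`) and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

STATE_DIM, Z_DIM, N_ACTIONS = 3, 8, 4  # hypothetical sizes

# Hypothetical random weights standing in for trained F and B networks.
W_B = rng.normal(size=(STATE_DIM, Z_DIM))
W_FS = rng.normal(size=(N_ACTIONS, STATE_DIM, Z_DIM))
W_FZ = rng.normal(size=(N_ACTIONS, Z_DIM, Z_DIM))


def backward(states: np.ndarray) -> np.ndarray:
    """B(s): embed a batch of states, shape (n, Z_DIM)."""
    return np.tanh(states @ W_B)


def forward(state: np.ndarray, z: np.ndarray) -> np.ndarray:
    """F(s, a, z) for every discrete action a, shape (N_ACTIONS, Z_DIM)."""
    return np.tanh(np.einsum("i,aid->ad", state, W_FS) + np.einsum("i,aid->ad", z, W_FZ))


def infer_task(states: np.ndarray, rewards: np.ndarray) -> np.ndarray:
    """Estimate z_r = E_s[r(s) B(s)], then rescale to norm sqrt(Z_DIM) (assumed convention)."""
    z = (rewards[:, None] * backward(states)).mean(axis=0)
    return np.sqrt(Z_DIM) * z / np.linalg.norm(z)


def act(state: np.ndarray, z: np.ndarray) -> int:
    """Greedy zero-shot policy: argmax over a of F(s, a, z)^T z."""
    q_values = forward(state, z) @ z
    return int(np.argmax(q_values))


# Usage: the task is given only through reward samples; F and B are not retrained.
states = rng.normal(size=(256, STATE_DIM))
rewards = (states[:, 0] > 0).astype(float)  # hypothetical reward: "be where x > 0"
z_r = infer_task(states, rewards)
print(z_r.shape, act(states[0], z_r))
```

The same pre-trained F and B serve every task. Only z_r changes, which is what makes the generalisation zero-shot.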

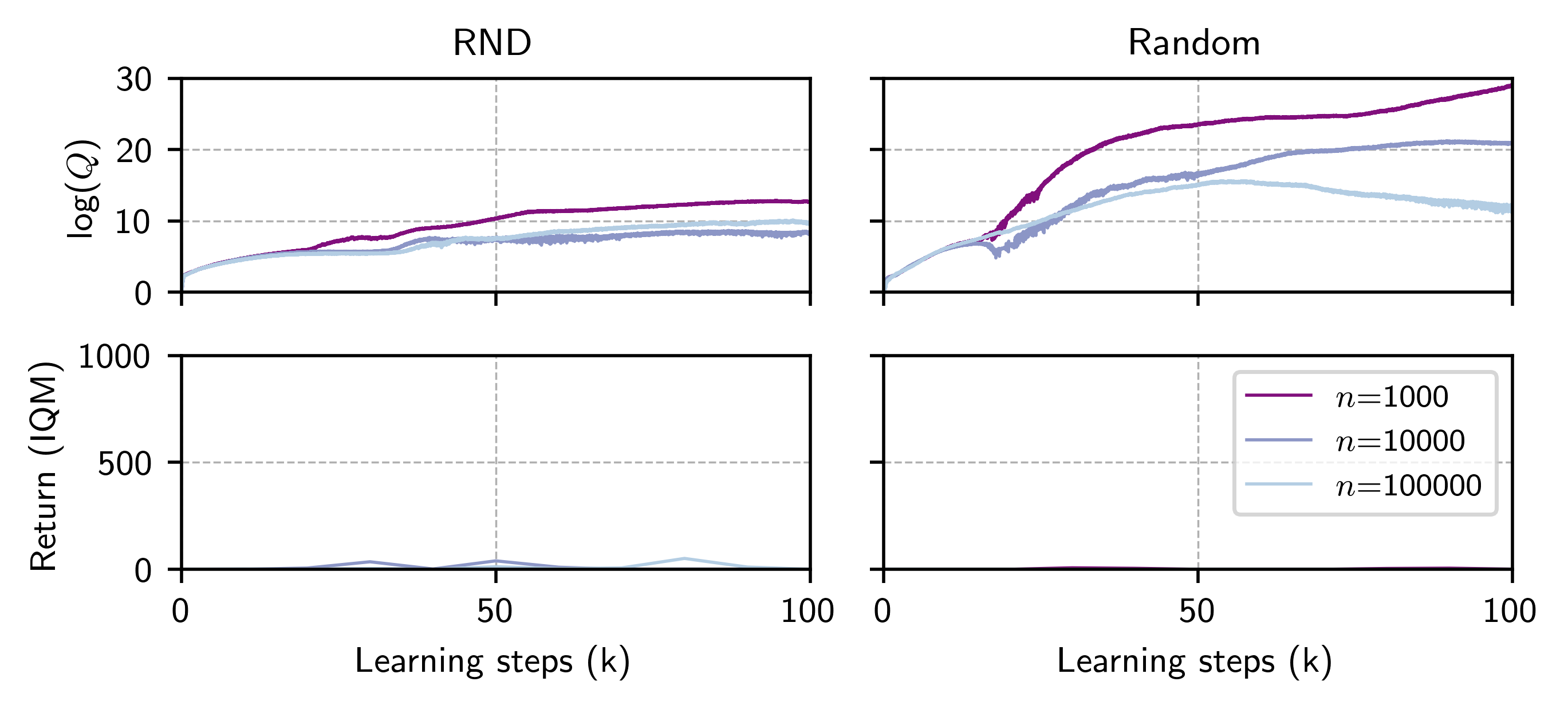

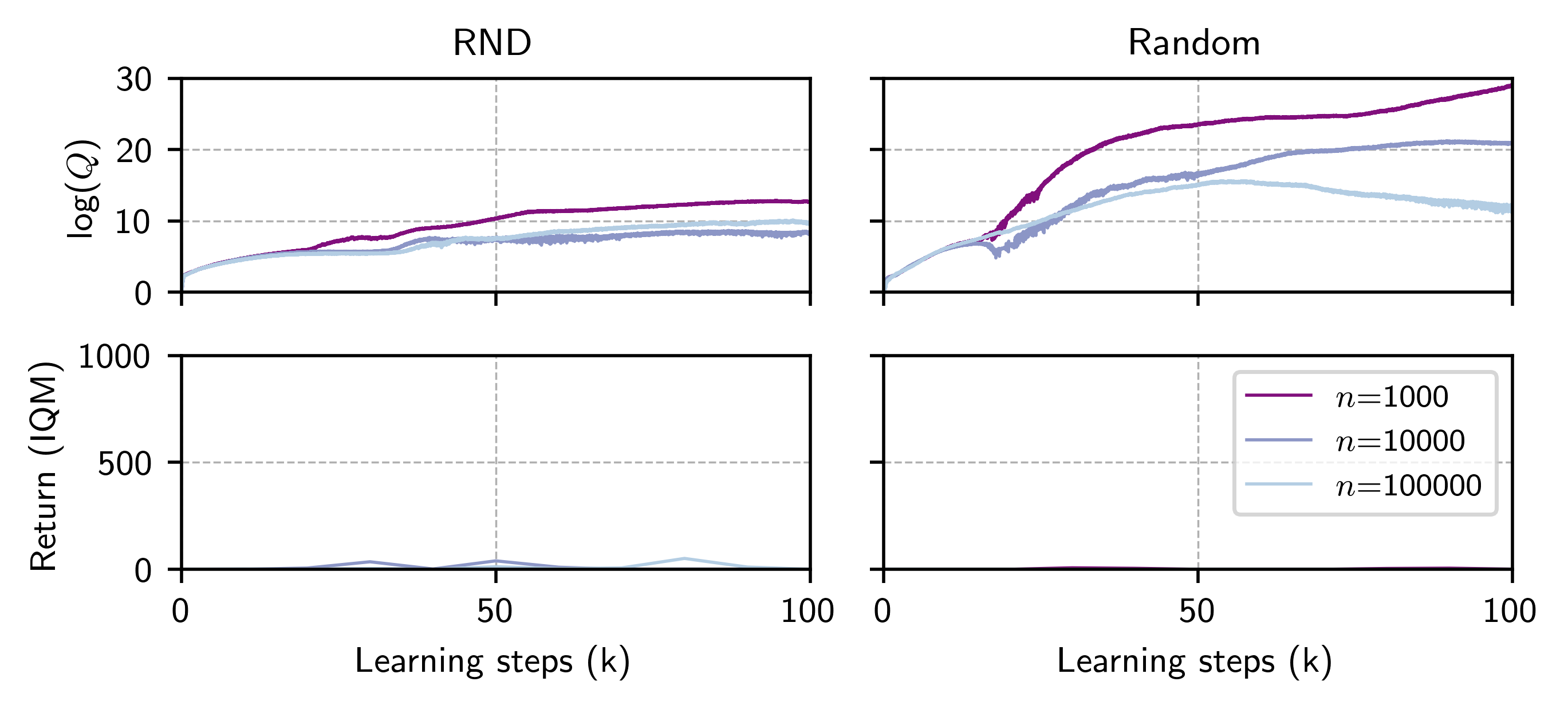

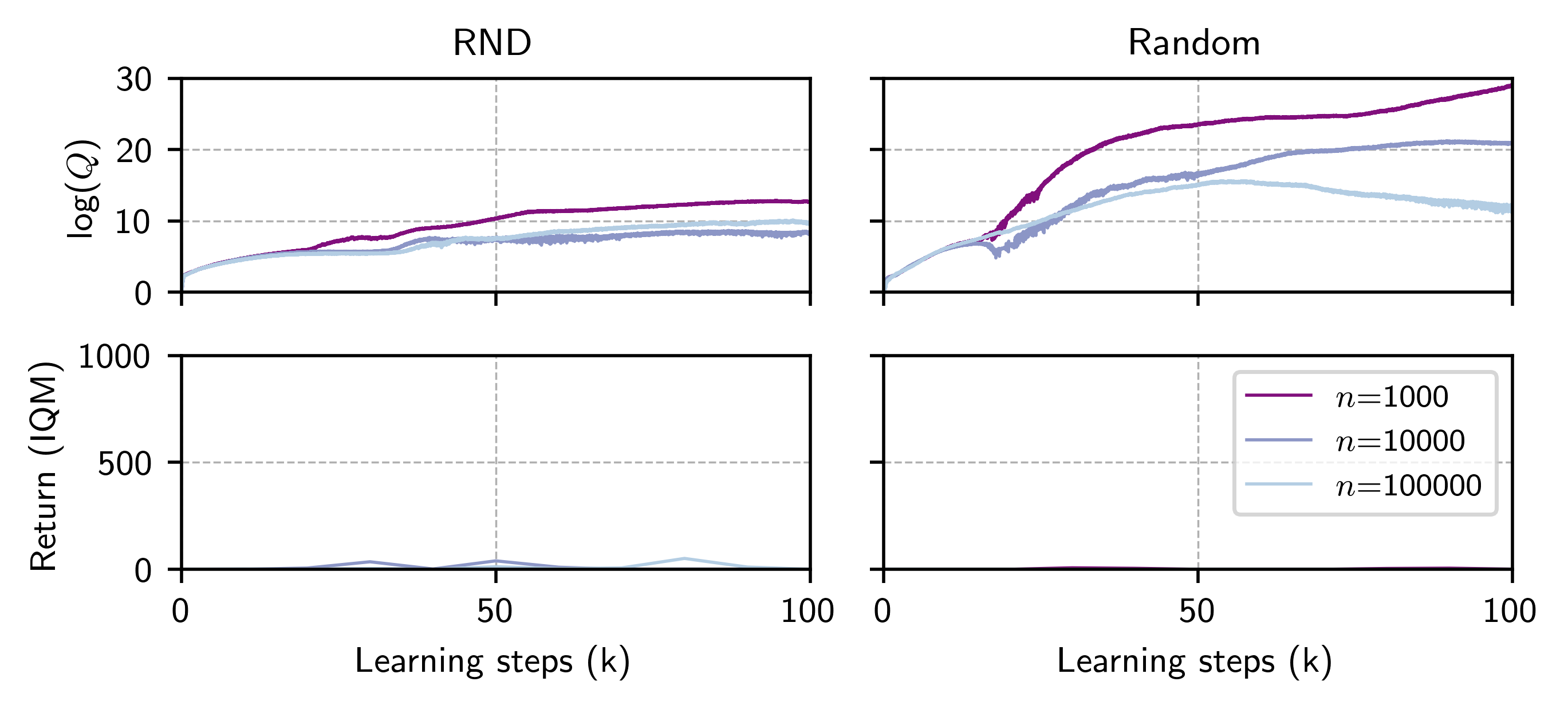

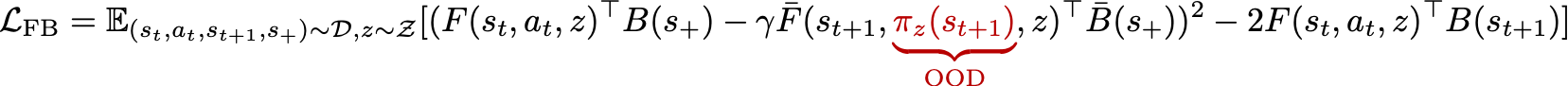

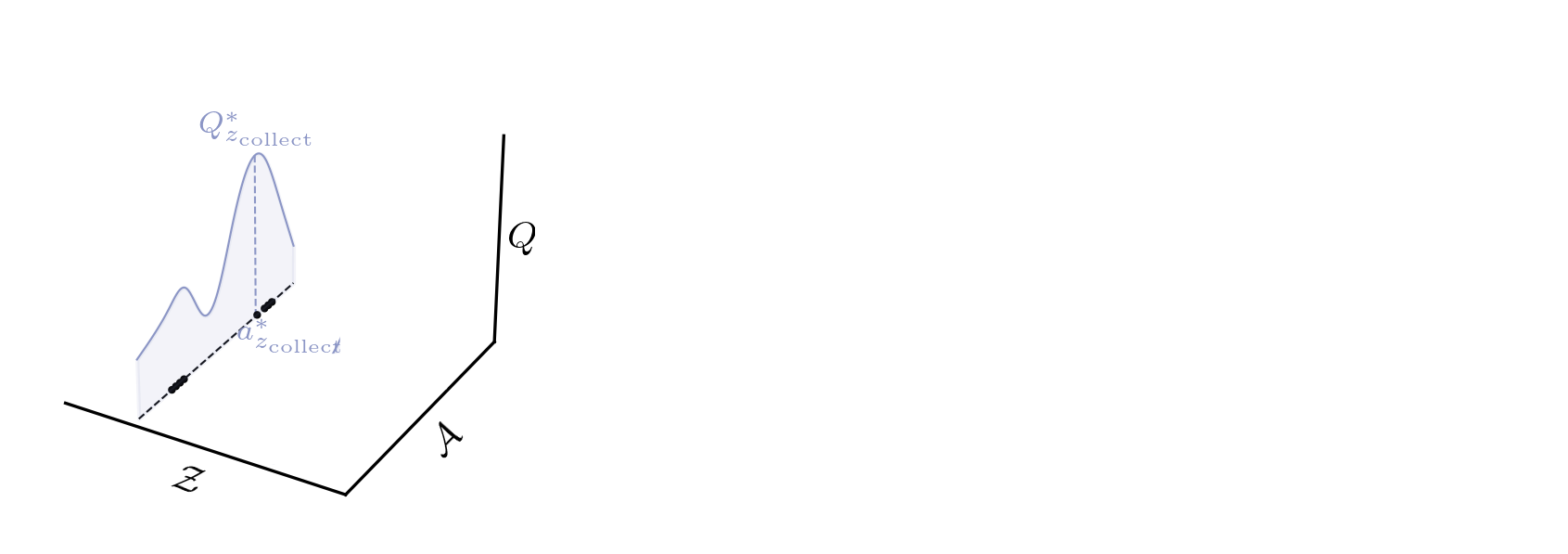

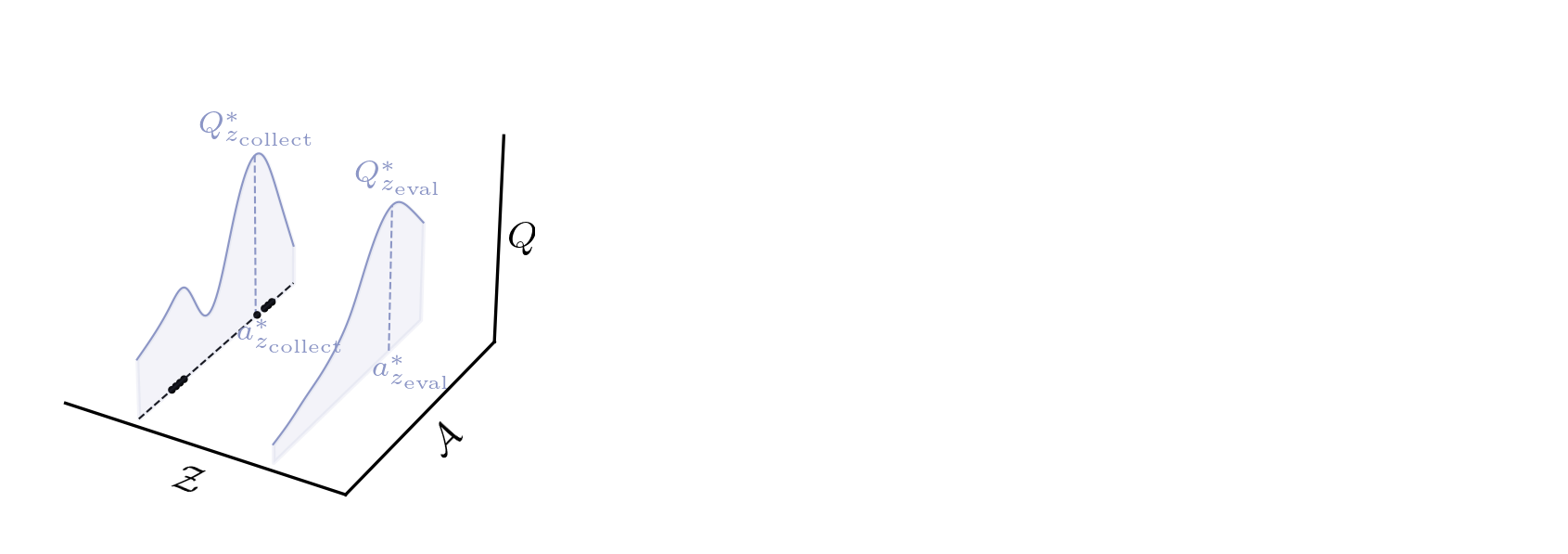

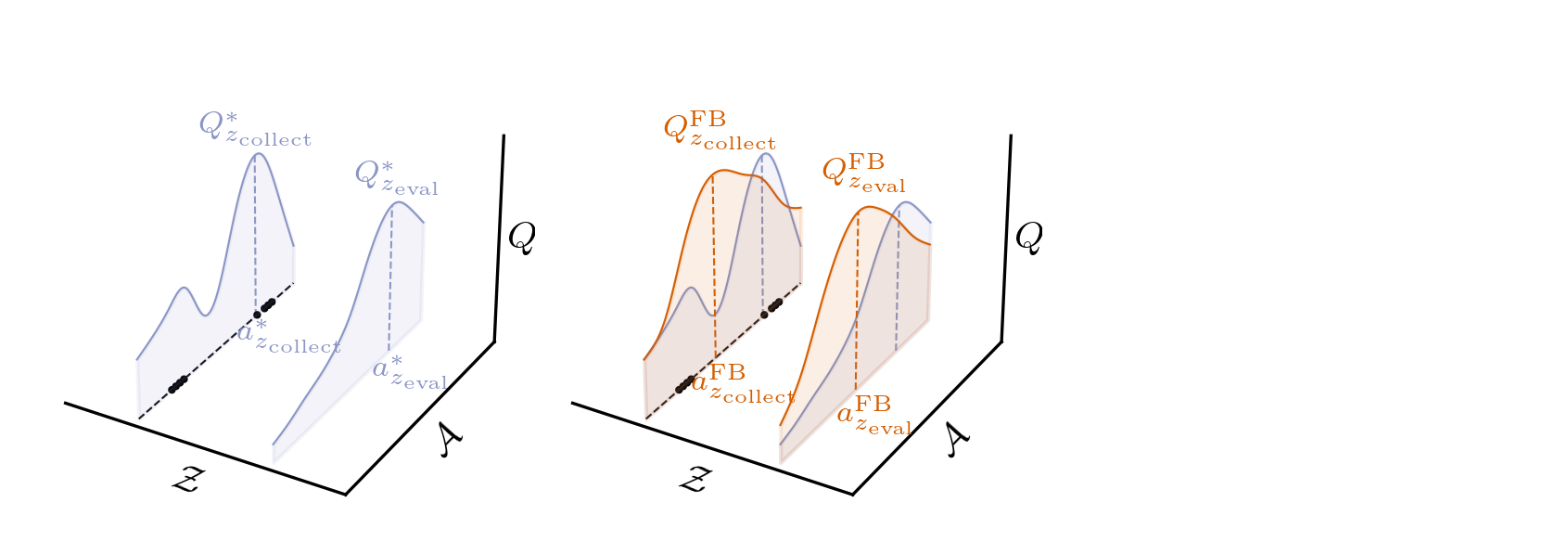

Out-of-distribution Value Overestimation in BFMs

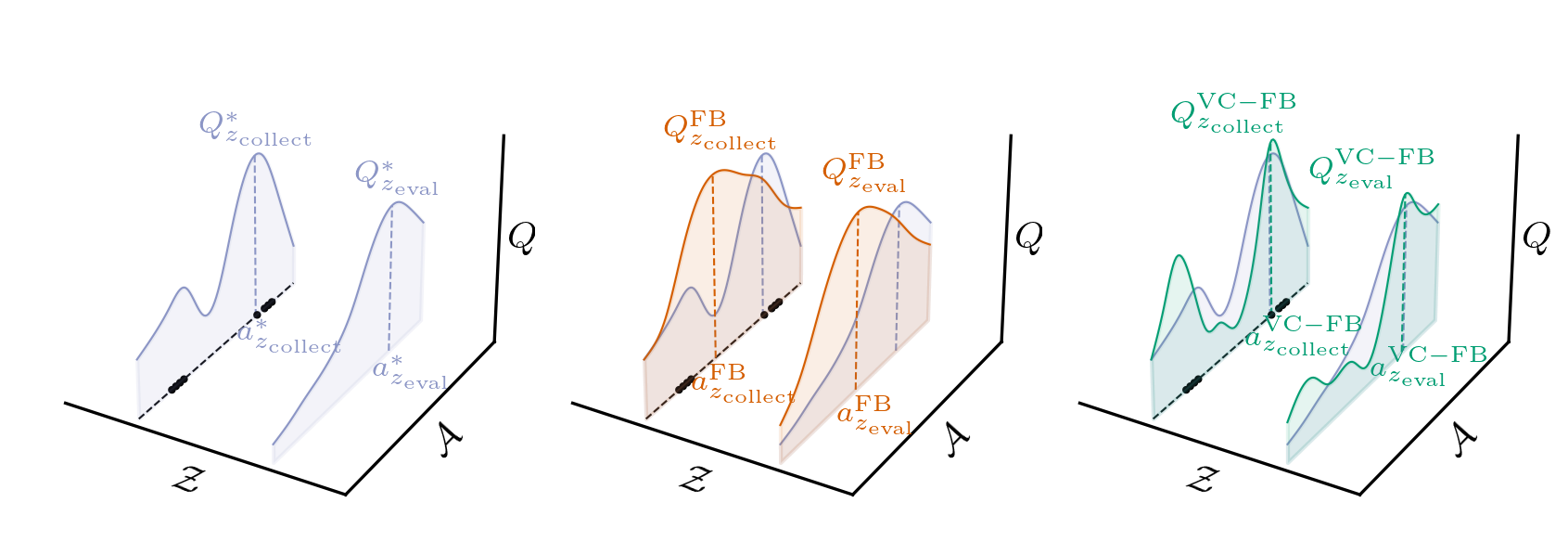

Conservative BFMs

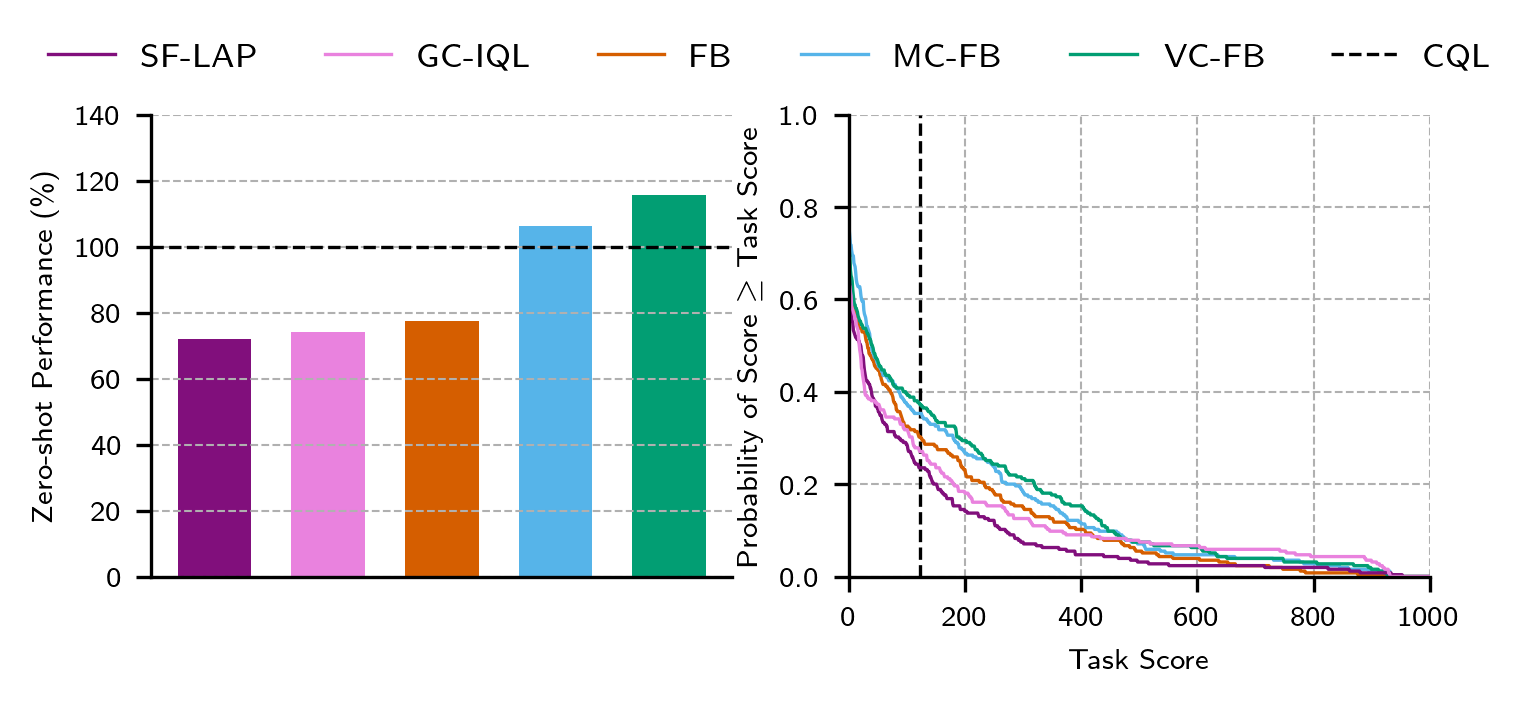

ExORL Results

Baselines

- Zero-shot RL: FB, SF-LAP [5]

- Goal-conditioned RL: GC-IQL [6]

- Offline RL: CQL [7]

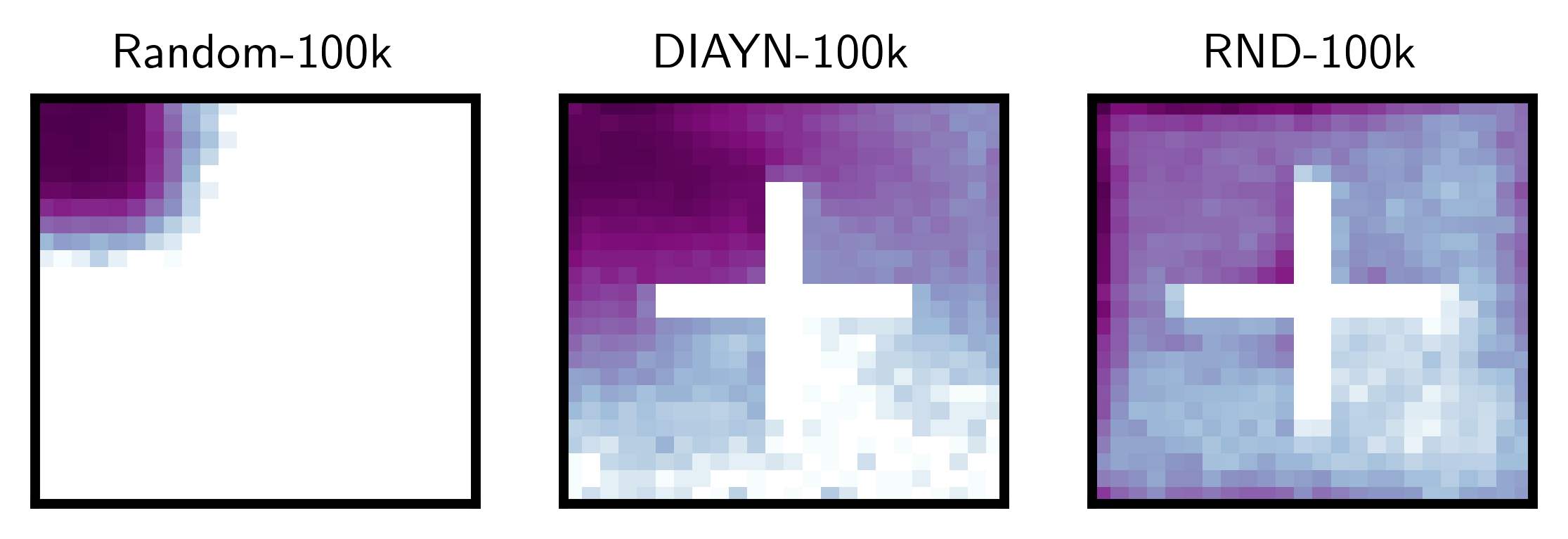

Datasets

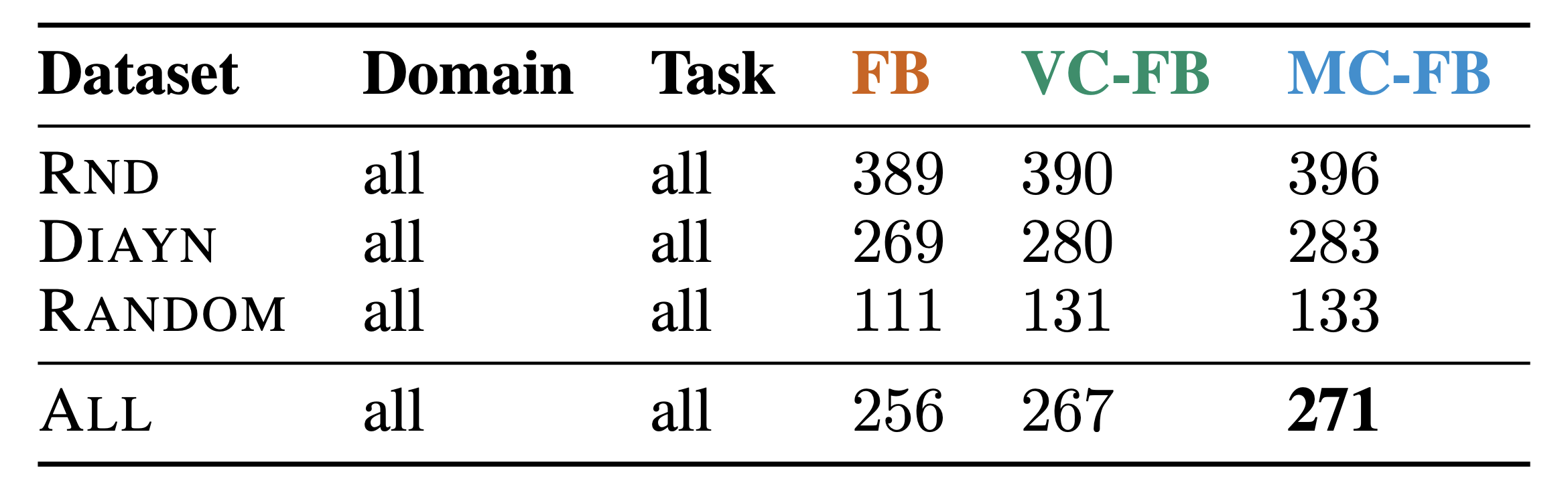

ExORL Results

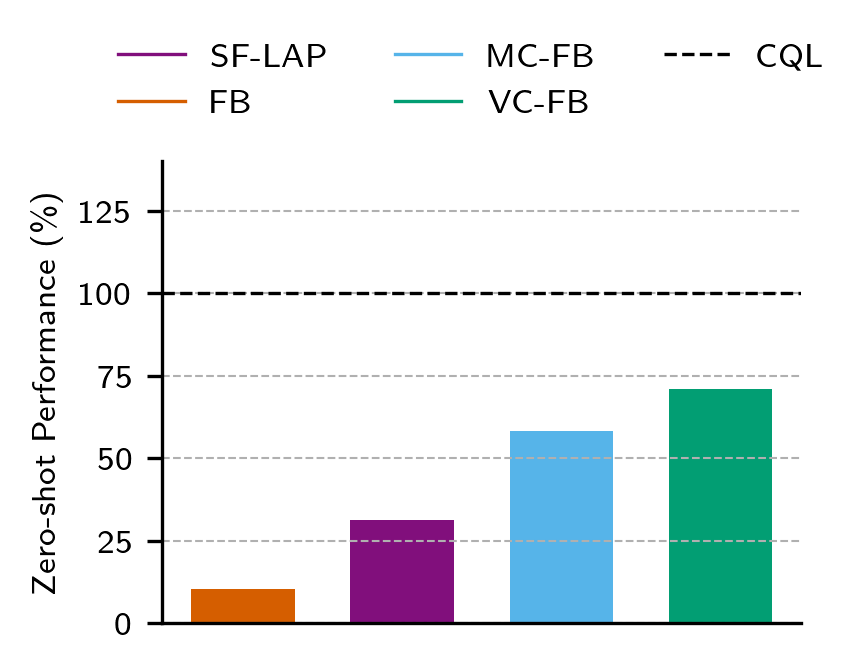

D4RL Results

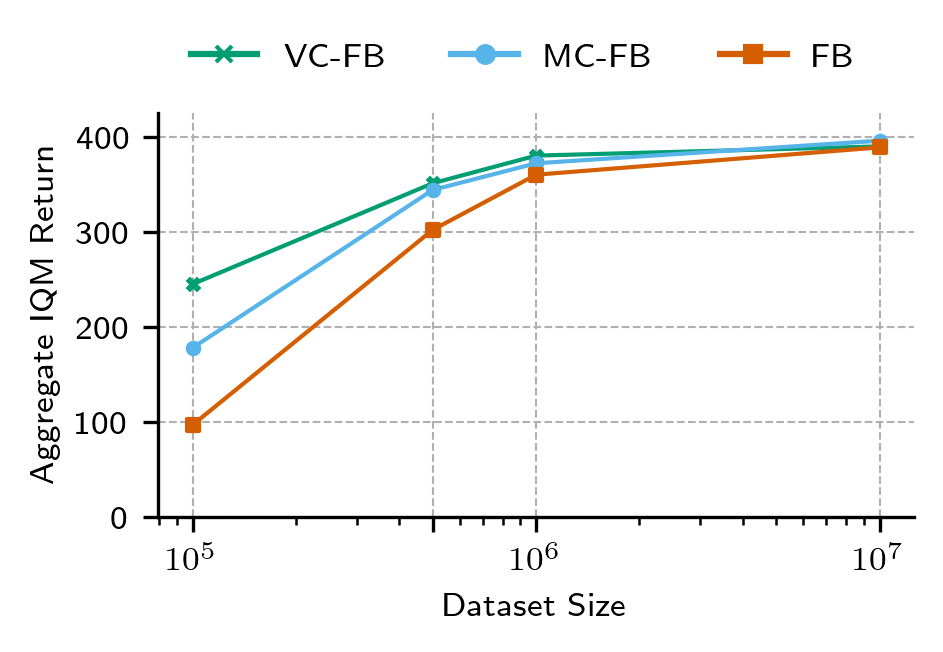

Performance on Idealised Datasets is Unaffected

Conclusions

- Like standard offline RL methods, BFMs suffer from distribution shift: they overestimate the values of out-of-distribution actions

- To resolve this, we introduce Conservative BFMs

- Conservative BFMs considerably outperform standard BFMs on low-quality datasets

- Conservative BFMs do not compromise performance on idealised datasets
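To make the link to offline RL concrete, the sketch below applies a CQL-style [7] conservative regulariser to BFM values Q(s, a, z) = F(s, a, z)^T z. It pushes down a soft maximum over all actions and pushes up the values of actions that actually appear in the dataset. The penalty is therefore large when unseen actions are valued above seen ones. This is a minimal sketch that assumes discrete actions and a hypothetical weight `alpha`. It illustrates the general conservatism idea borrowed from offline RL and is not necessarily the exact regulariser used by Conservative BFMs. The function and variable names are hypothetical.

```python
import numpy as np


def logsumexp(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log(sum(exp(x))) along one axis."""
    m = x.max(axis=axis, keepdims=True)
    return (m + np.log(np.exp(x - m).sum(axis=axis, keepdims=True))).squeeze(axis)


def conservative_penalty(q_all: np.ndarray, data_actions: np.ndarray, alpha: float = 1.0) -> float:
    """CQL-style regulariser on BFM values (illustrative sketch).

    q_all: (batch, n_actions) values Q(s, a, z) = F(s, a, z)^T z for every action.
    data_actions: (batch,) indices of the actions taken in the dataset.
    Returns alpha * mean(logsumexp_a Q(s, a, z) - Q(s, a_data, z)). This is >= 0
    because logsumexp upper-bounds every entry, and it grows when actions absent
    from the data are valued above dataset actions.
    """
    soft_max = logsumexp(q_all, axis=1)
    q_data = q_all[np.arange(len(data_actions)), data_actions]
    return float(alpha * (soft_max - q_data).mean())


# Usage with hypothetical values: action 2 never appears in the data but looks best.
q_all = np.array([[1.0, 0.5, 5.0],
                  [0.8, 1.2, 4.0]])
data_actions = np.array([0, 1])
print(conservative_penalty(q_all, data_actions))
```

In training, a term like this would be added to the usual BFM loss, so minimising it lowers overestimated out-of-distribution values. A larger `alpha` trades more conservatism against fitting the data.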